How to safeguard your business from AI-generated deepfakes

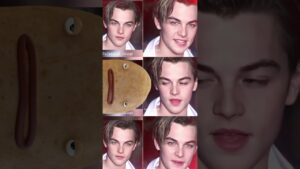

Recently, cybercriminals used ‘deepfake’ videos of a multinational company’s executives to convince the company’s Hong Kong-based employees to wire US$25.6 million. After a video conference call featuring multiple deepfakes, the employees believed that their UK-based chief financial officer had requested the transfer. Police have reportedly arrested six people in connection with the scam. This use of AI technology is dangerous and manipulative. Without proper guidelines and frameworks in place, more organizations risk falling victim to AI scams like deepfakes.

Deepfakes 101 and their rising threat

Deepfakes are digitally altered media, including photos, videos and audio clips, that seem to depict a real person. They are created by training an AI system on real clips of a person and then using that system to generate realistic (yet inauthentic) new media. Deepfake use is becoming more common. The Hong Kong case was the latest in a series of high-profile deepfake incidents in recent weeks. Fake, explicit images of Taylor Swift circulated on social media; the political party of an imprisoned election candidate in Pakistan used a deepfake video of him to deliver a speech; and a deepfake ‘voice clone’ of President Biden called primary voters to tell them not to vote.

Lower-profile cases of deepfake use by cybercriminals have also been rising in both scale and sophistication. In the banking sector, cybercriminals are now attempting to defeat voice authentication by using voice clones to impersonate users and gain access to their funds. Banks have responded by improving their ability to identify deepfake use and by increasing authentication requirements.

Cybercriminals have also targeted individuals with ‘spear phishing’ attacks that use deepfakes. A common approach is to use a voice clone to impersonate someone in a phone call to that person’s family members or friends and ask for funds to be transferred to a third-party account. Last year, a McAfee survey found that 70% of respondents were not confident that they could distinguish between a person and a voice clone of that person, and that nearly half would respond to a request for funds if the family member or friend making the call claimed to have been robbed or to have been in a car accident.

Cybercriminals have also called people pretending to be tax authorities, banks, healthcare providers and insurers in efforts to gain financial and personal details.

In February, the Federal Communications Commission ruled that phone calls using AI-generated human voices are illegal unless made with prior express consent of the called party. The Federal Trade Commission also finalized a rule prohibiting AI impersonation of government organizations and businesses and proposed a similar rule prohibiting AI impersonation of individuals. This adds to a growing list of legal and regulatory measures being put in place around the world to combat deepfakes.

Stay protected against deepfakes

To protect employees and brand reputation against deepfakes, leaders should take the following steps:

Educate employees on an ongoing basis, both about AI-enabled scams and, more generally, about new AI capabilities and their risks.

Upgrade phishing guidance to include deepfake threats. Many companies have already educated employees about phishing emails and urged caution when receiving suspicious requests via unsolicited emails. Such phishing guidance should incorporate AI deepfake scams and note that they may use not just text and email, but also video, images and audio.

Appropriately increase or calibrate authentication of employees, business partners and customers. For example, use more than one mode of authentication, depending on the sensitivity and risk of a decision or transaction.

Consider the impacts of deepfakes on company assets, like logos, advertising characters and advertising campaigns. Such company assets can easily be replicated using deepfakes and spread quickly via social media and other internet channels. Consider how your company will mitigate these risks and educate stakeholders.

Expect more and better deepfakes, given the pace of improvement in generative AI, the number of major election processes underway in 2024, and the ease with which deepfakes can propagate between people and across borders.
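
The step on calibrating authentication to risk can be made concrete with a small policy function. The following is a minimal sketch, not a recommended control set: the threshold, the channel names and the check names (`password`, `hardware_token`, `callback_to_known_number`) are all hypothetical and chosen only for illustration. It shows one way a company might require more modes of authentication as the sensitivity of a payment request increases.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical threshold, in the company's base currency (illustrative only).
HIGH_VALUE_THRESHOLD = 10_000

# Channels where the requester is not physically present and could be
# impersonated with a deepfake (an assumption made for this sketch).
REMOTE_CHANNELS = {"email", "phone", "video"}


@dataclass
class PaymentRequest:
    amount: float
    channel: str           # e.g. "email", "phone", "video", "in_person"
    new_beneficiary: bool  # True if funds go to an account not used before


def required_checks(request: PaymentRequest) -> list[str]:
    """Return the authentication checks to complete before paying."""
    checks = ["password"]  # baseline check for every request
    high_value = request.amount >= HIGH_VALUE_THRESHOLD

    # A second factor for large payments or unfamiliar beneficiaries.
    if high_value or request.new_beneficiary:
        checks.append("hardware_token")

    # For large remote requests, confirm through a separate channel that
    # the requester did not choose, such as a number already on file.
    if high_value and request.channel in REMOTE_CHANNELS:
        checks.append("callback_to_known_number")

    return checks
```

Under these assumed rules, a request like the one in the Hong Kong case (a very large transfer requested over video) would trigger all three checks, while a small in-person payment to a known account would need only the baseline check. The design choice worth noting is the out-of-band callback: it does not depend on judging whether a voice or face looks genuine.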

Though deepfakes are a cybersecurity concern, companies should also think of them as complex and emerging phenomena with broader repercussions. A proactive and thoughtful approach to addressing deepfakes can help educate stakeholders and ensure that measures to combat them are responsible, proportionate and appropriate.

(Photo by Markus Spiske)
